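This article shows how to decode audio with MediaExtractor and MediaCodec, mix it with other audio, and then encode the result into the audio format you need.

Source code: https://github.com/YeDaxia/MusicPlus

Understanding digital audio

Before getting to the implementation, let's look at the basic properties of digital audio.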

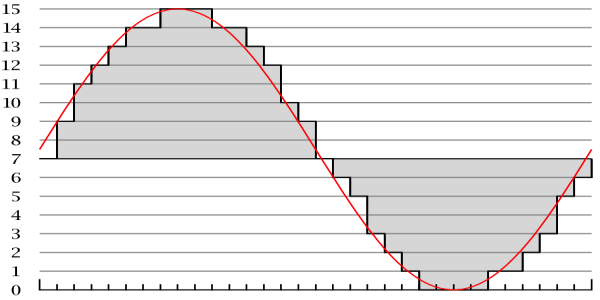

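Sample rate: the number of samples captured per second, expressed in hertz (Hz). The higher the sample rate, the more closely the samples follow the original waveform.

Bit depth: the dynamic range that can be recorded, measured in bits. It determines how large a difference in amplitude can be represented.

Channels: the number of audio channels, for example a left channel and a right channel.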
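These three properties together fix how much raw data one second of audio takes. The sketch below works through that arithmetic; `PcmSizes` and its method names are hypothetical helpers introduced only for illustration.

```java
/** Size arithmetic for uncompressed PCM audio (illustrative helper, not part of any library). */
final class PcmSizes {

    /** Bytes produced by one second of audio. */
    static int bytesPerSecond(int sampleRateHz, int channels, int bitsPerSample) {
        // Each sample frame holds one sample per channel; each sample takes bitsPerSample / 8 bytes.
        return sampleRateHz * channels * (bitsPerSample / 8);
    }

    /** Bytes needed to hold the given duration in milliseconds. */
    static long bytesForDuration(int sampleRateHz, int channels, int bitsPerSample, long millis) {
        return bytesPerSecond(sampleRateHz, channels, bitsPerSample) * millis / 1000;
    }
}
```

For 44100 Hz, 2 channels and 16 bits, `bytesPerSecond` gives 176400, so one minute of raw stereo audio takes about 10 MB.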

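After sampling and quantization, audio is simply a sequence of numbers. Loudness (amplitude) shows up in the magnitude of each number, while pitch (frequency) and timbre are tied to the time dimension and show up in how the numbers change from one to the next.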
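One way to see this is to generate a few tones and measure them. The following sketch is only an illustration under simple assumptions (mono, 16-bit samples, pure sine tones); `SineDemo` is a hypothetical class name.

```java
/** Generates sine tones as 16-bit samples and measures them (illustrative only). */
final class SineDemo {

    /** Returns count mono samples of a sine tone; amplitude is a fraction of full scale, 0..1. */
    static short[] sine(double freqHz, double amplitude, int sampleRateHz, int count) {
        short[] out = new short[count];
        for (int i = 0; i < count; i++) {
            double v = amplitude * Math.sin(2 * Math.PI * freqHz * i / sampleRateHz);
            out[i] = (short) Math.round(v * Short.MAX_VALUE);
        }
        return out;
    }

    /** Largest absolute sample value: this is where loudness shows up. */
    static int peak(short[] samples) {
        int max = 0;
        for (short s : samples) {
            max = Math.max(max, Math.abs((int) s));
        }
        return max;
    }

    /** Number of sign changes between neighbouring samples: this grows with pitch. */
    static int zeroCrossings(short[] samples) {
        int crossings = 0;
        for (int i = 1; i < samples.length; i++) {
            if ((samples[i - 1] < 0) != (samples[i] < 0)) {
                crossings++;
            }
        }
        return crossings;
    }
}
```

A louder tone has a larger `peak`, while a higher tone of the same loudness has the same peak but more `zeroCrossings` per second.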

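Before encoding and decoding anything, let's get a feel for what raw audio data actually looks like. A WAV file typically stores raw PCM data after a header, so we can play it by writing that data straight into an AudioTrack. Below is the layout of a sample WAV file; for details of the format, see WaveFormat. You can also inspect the binary content of a WAV file with a tool such as Binary Viewer.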
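To make the header fields concrete, here is a minimal sketch that reads them in plain Java. It assumes the canonical 44-byte layout described in the WaveFormat reference (a 16-byte `fmt ` chunk followed directly by the `data` chunk); real files may carry extra chunks that this sketch does not handle. `WavHeader` is a hypothetical class introduced for illustration.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

/** Fields of a canonical 44-byte PCM WAV header (illustrative; ignores optional chunks). */
final class WavHeader {
    final int channels;
    final int sampleRate;
    final int bitsPerSample;
    final int dataSize;

    private WavHeader(int channels, int sampleRate, int bitsPerSample, int dataSize) {
        this.channels = channels;
        this.sampleRate = sampleRate;
        this.bitsPerSample = bitsPerSample;
        this.dataSize = dataSize;
    }

    /** Parses the first 44 bytes of a WAV file; numeric fields are little-endian. */
    static WavHeader parse(byte[] header) {
        if (header.length < 44) {
            throw new IllegalArgumentException("need at least 44 bytes");
        }
        String riff = new String(header, 0, 4, StandardCharsets.US_ASCII);
        String wave = new String(header, 8, 4, StandardCharsets.US_ASCII);
        if (!riff.equals("RIFF") || !wave.equals("WAVE")) {
            throw new IllegalArgumentException("not a RIFF/WAVE file");
        }
        ByteBuffer b = ByteBuffer.wrap(header).order(ByteOrder.LITTLE_ENDIAN);
        if (b.getShort(20) != 1) {
            throw new IllegalArgumentException("audio format is not PCM");
        }
        // Offsets: 22 channels, 24 sample rate, 34 bits per sample, 40 size of the PCM data.
        return new WavHeader(b.getShort(22), b.getInt(24), b.getShort(34), b.getInt(40));
    }
}
```

The PCM samples start right after these 44 bytes, which is the part you would hand to AudioTrack.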

The following code plays a raw WAV file; try it on a piece of audio in WAV format:

    int sampleRateInHz = 44100;

If you have tried the example above, you should now have a feel for what raw audio data is.

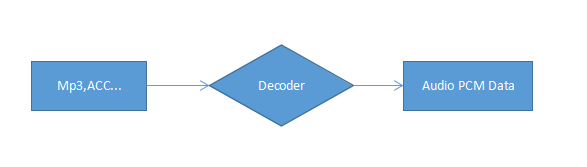

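Decoding audio

From the introduction above it follows that the goal of decoding is to turn encoded data back into the kind of raw data stored in a WAV file.

The code below uses MediaExtractor and MediaCodec to extract the encoded audio data and decode it into raw audio data: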

    final String encodeFile = "your encode audio file path";

The decoded data carries no file header, so ordinary players cannot recognize or play it. You can use AudioTrack to play it back and check that the decoded data is correct.
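If you would rather check the result in an ordinary player, one option is to prepend a WAV header to the decoded bytes. The sketch below is a minimal version of that idea, assuming the data is plain PCM and that you already know its sample rate, channel count and bit depth; `WavWriter` is a hypothetical helper, not part of the Android SDK.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

/** Builds a canonical 44-byte WAV header for raw PCM data (illustrative helper). */
final class WavWriter {

    private static byte[] ascii(String s) {
        return s.getBytes(StandardCharsets.US_ASCII);
    }

    /** Returns the header for pcmBytes bytes of PCM audio; numeric fields are little-endian. */
    static byte[] header(int pcmBytes, int sampleRate, int channels, int bitsPerSample) {
        int blockAlign = channels * bitsPerSample / 8;   // bytes per sample frame
        ByteBuffer b = ByteBuffer.allocate(44).order(ByteOrder.LITTLE_ENDIAN);
        b.put(ascii("RIFF")).putInt(36 + pcmBytes);      // size of everything after this field
        b.put(ascii("WAVE"));
        b.put(ascii("fmt ")).putInt(16);                 // the PCM fmt chunk is 16 bytes long
        b.putShort((short) 1);                           // audio format 1 = PCM
        b.putShort((short) channels);
        b.putInt(sampleRate);
        b.putInt(sampleRate * blockAlign);               // byte rate
        b.putShort((short) blockAlign);
        b.putShort((short) bitsPerSample);
        b.put(ascii("data")).putInt(pcmBytes);
        return b.array();
    }

    /** Returns header + pcm as one array, ready to be written to a .wav file. */
    static byte[] wrap(byte[] pcm, int sampleRate, int channels, int bitsPerSample) {
        byte[] h = header(pcm.length, sampleRate, channels, bitsPerSample);
        byte[] out = new byte[h.length + pcm.length];
        System.arraycopy(h, 0, out, 0, h.length);
        System.arraycopy(pcm, 0, out, h.length, pcm.length);
        return out;
    }
}
```

Writing the array returned by `wrap` to a file with a `.wav` extension should give something a desktop player can open, provided the parameters match the decoded stream.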

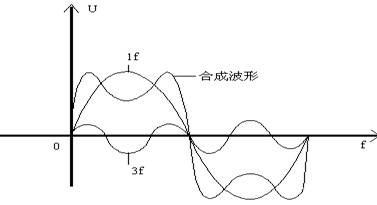

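Mixing audio

The principle behind audio mixing: adding quantized audio signals together is equivalent to sound waves adding together in the air.

In terms of the audio data, this means simply adding the values that belong to the same channel. That raises a problem, though: the sum can overflow. Many solutions to this exist; here we use a simple averaging algorithm (average audio mixing algorithm, called the V algorithm for short). In the demo program below we assume that the audio files all share the same sample rate, channel count and bit depth, which makes them easier to process. Also note that the raw audio data is stored in little-endian order, and that a PCM value of 0 means silence (an amplitude of 0).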
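As a standalone illustration of the averaging idea, here is a minimal sketch that mixes in-memory buffers. It assumes 16-bit signed little-endian PCM and buffers of equal length with the same channel layout; `AverageMixer` is a hypothetical name and this is not the code from the MusicPlus repository.

```java
/** Mixes 16-bit little-endian PCM buffers by averaging (illustrative sketch). */
final class AverageMixer {

    /** Returns the sample-by-sample average of all tracks; every track must have the same even length. */
    static byte[] mix(byte[][] tracks) {
        if (tracks.length == 0) {
            return new byte[0];
        }
        int len = tracks[0].length;
        for (byte[] t : tracks) {
            if (t.length != len || len % 2 != 0) {
                throw new IllegalArgumentException("tracks must share one even length");
            }
        }
        byte[] out = new byte[len];
        for (int i = 0; i < len; i += 2) {
            int sum = 0;
            for (byte[] t : tracks) {
                // Little-endian: low byte first, the high byte carries the sign.
                sum += (short) ((t[i] & 0xFF) | (t[i + 1] << 8));
            }
            // The average of N 16-bit values always fits in 16 bits, so it cannot overflow.
            int avg = sum / tracks.length;
            out[i] = (byte) (avg & 0xFF);
            out[i + 1] = (byte) ((avg >> 8) & 0xFF);
        }
        return out;
    }
}
```

Because interleaved samples at the same position belong to the same channel, averaging position by position keeps the left and right channels separate. The trade-off of plain averaging is that each track becomes quieter as more tracks are added.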

Implementation:

    public void mixAudios(File[] rawAudioFiles){

As before, you can play the mixed data with AudioTrack to check how the mix sounds.

For more on mixing algorithms, see this paper.

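Encoding audio

The purpose of encoding audio is to store and transmit it in less space. Encodings are either lossy or lossless; the familiar MP3 and AAC formats are both lossy. In the example below we use MediaCodec to encode the mixed data, and we use AAC as the target format.

AAC audio comes in two formats, ADIF and ADTS. ADIF is suited to files on disk: it takes less space, but it cannot be played while it is still downloading. ADTS is suited to streaming: it is a sequence of frames that can be played frame by frame. We encode to ADTS here, so we first need to understand how its frame sequence is built:

ADTS frame structure:

header :: body

Fields of the ADTS frame header: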

| Length (bits) | Description |
|---|---|
| 12 | syncword 0xFFF, all bits must be 1 |
| 1 | MPEG version: 0 for MPEG-4, 1 for MPEG-2 |
| 2 | layer, always 0 |
| 1 | protection absent, set to 1 if there is no CRC and 0 if there is CRC |
| 2 | profile, the MPEG-4 Audio Object Type minus 1 |
| 4 | MPEG-4 Sampling Frequency Index (15 is forbidden) |
| 1 | private bit, guaranteed never to be used by MPEG, set to 0 when encoding, ignore when decoding |
| 3 | MPEG-4 Channel Configuration (in the case of 0, the channel configuration is sent via an inband PCE) |
| 1 | originality, set to 0 when encoding, ignore when decoding |
| 1 | home, set to 0 when encoding, ignore when decoding |
| 1 | copyrighted id bit, the next bit of a centrally registered copyright identifier, set to 0 when encoding, ignore when decoding |
| 1 | copyright id start, signals that this frame's copyright id bit is the first bit of the copyright id, set to 0 when encoding, ignore when decoding |
| 13 | frame length, this value must include 7 or 9 bytes of header length: FrameLength = (ProtectionAbsent == 1 ? 7 : 9) + size(AACFrame) |
| 11 | buffer fullness |
| 2 | number of AAC frames (RDBs) in ADTS frame minus 1, for maximum compatibility always use 1 AAC frame per ADTS frame |
| 16 | CRC if protection absent is 0 |
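The header fields can be packed into bytes as in the following sketch. It assumes no CRC (so the header is 7 bytes), MPEG-4, one AAC frame per ADTS frame, and a buffer fullness of 0x7FF, a value commonly used to signal variable bitrate. `AdtsHeader` and its method names are hypothetical helpers written for this illustration.

```java
/** Packs a 7-byte ADTS header without CRC (illustrative sketch). */
final class AdtsHeader {

    /** Sample rates in the order of the 4-bit MPEG-4 sampling frequency index. */
    private static final int[] SAMPLE_RATES = {
        96000, 88200, 64000, 48000, 44100, 32000, 24000, 22050, 16000, 12000, 11025, 8000, 7350
    };

    static int frequencyIndex(int sampleRate) {
        for (int i = 0; i < SAMPLE_RATES.length; i++) {
            if (SAMPLE_RATES[i] == sampleRate) {
                return i;
            }
        }
        throw new IllegalArgumentException("unsupported sample rate: " + sampleRate);
    }

    /** Builds the header for one AAC frame of payloadLength bytes; audioObjectType 2 means AAC LC. */
    static byte[] build(int audioObjectType, int sampleRate, int channelConfig, int payloadLength) {
        int profile = audioObjectType - 1;
        int freqIdx = frequencyIndex(sampleRate);
        int frameLength = payloadLength + 7;              // the length field includes the header itself
        if (frameLength > 0x1FFF) {
            throw new IllegalArgumentException("frame does not fit in 13 bits");
        }
        byte[] h = new byte[7];
        h[0] = (byte) 0xFF;                               // syncword, high 8 bits
        h[1] = (byte) 0xF1;                               // syncword low 4 bits, MPEG-4, layer 0, no CRC
        h[2] = (byte) ((profile << 6) | (freqIdx << 2) | (channelConfig >> 2)); // private bit stays 0
        h[3] = (byte) (((channelConfig & 3) << 6) | (frameLength >> 11));       // flag bits stay 0
        h[4] = (byte) ((frameLength >> 3) & 0xFF);
        h[5] = (byte) (((frameLength & 7) << 5) | 0x1F);  // high 5 bits of buffer fullness 0x7FF
        h[6] = (byte) 0xFC;                               // low 6 bits of fullness, frame count minus 1 = 0
        return h;
    }
}
```

For AAC LC at 44100 Hz with 2 channels, you would call `AdtsHeader.build(2, 44100, 2, encodedFrame.length)` and write the 7 returned bytes before each encoded frame.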

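The approach is now clear: prepend a header to every encoded frame and write it to a file. The saved .aac file should then be recognized and played by ordinary players. To keep things simple, we again assume that the mixed source data produced earlier has a sample rate of 44100 Hz, 2 channels and a bit depth of 16 bits.

The code below encodes raw audio data into AAC: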

    class AACAudioEncoder{

References

Digital audio: http://en.flossmanuals.net/pure-data/ch003_what-is-digital-audio/

WAV file format: http://soundfile.sapp.org/doc/WaveFormat/

AAC file format: http://www.cnblogs.com/caosiyang/archive/2012/07/16/2594029.html

Some CTS tests related to Android media programming: https://android.googlesource.com/platform/cts/+/jb-mr2-release/tests/tests/media/src/android/media/cts

Questions about converting WAV to AAC: http://stackoverflow.com/questions/18862715/how-to-generate-the-aac-adts-elementary-stream-with-android-mediacodec